Rendering - Web performance with Steve Kinney (Frontend Masters)

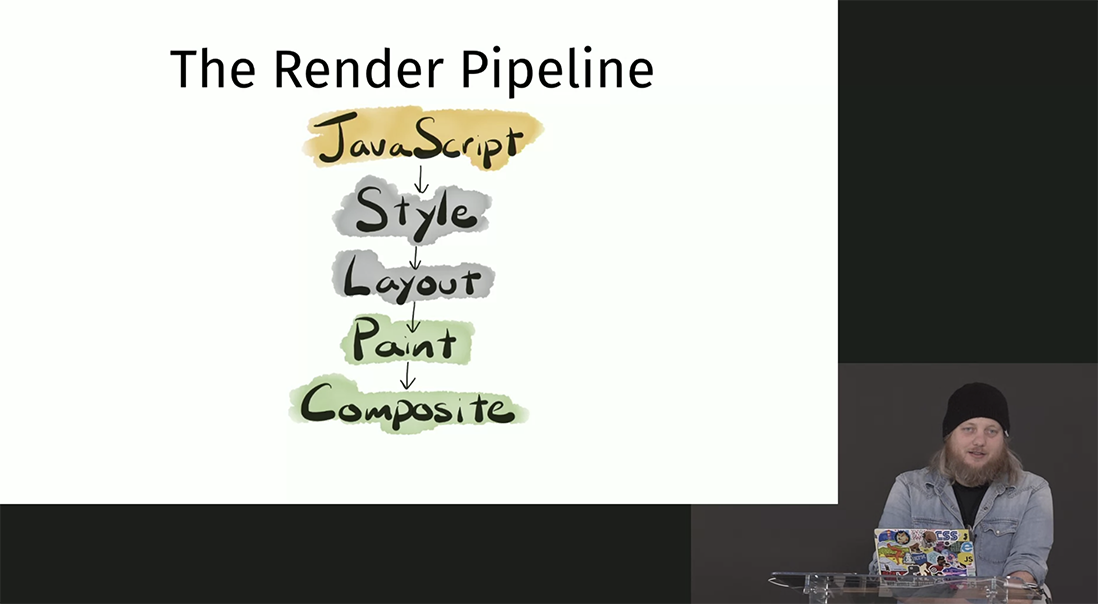

The steps to create a web page are: create the DOM, create the CSSOM, merge them into a render tree, calculate the layout based on the render tree and paint the results on the screen.

While the DOM and CSSOM can be reused and will mostly stay the same unless you add or remove a bunch of elements or load new stylesheets, the layout (or reflow) step will happen again whenever you use JavaScript to do things like changing the classes of elements or adding inline styles. That’s because changing the class of an element, for example, might mean that the element needs to be a different size or show up in a different place on the page, so the browser has to recalculate the geometry affected by the change.

Reflows are CPU intensive and they are blocking operations. Everything stops during a reflow, meaning users cannot do simple things like entering data or scrolling. This is many times worse if it happens in a loop. That’s why reflow-causing operations like adding new DOM nodes should be batched and done in a single take. In general, you want both the number of reflows and the time each reflow takes to be minimal. Also keep in mind that a reflow is always followed by a paint, and painting is often even more expensive.
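As a rough illustration of adding nodes in a single take, here is a minimal sketch that builds the new elements inside a DocumentFragment and inserts them into the page once. The helper name `appendItems`, its parameters and the `#list` element are assumptions made up for this example.

```javascript
// Hypothetical helper: build all the nodes off-document, then insert them once.
// `doc` is the document, passed in so the function does not depend on a global.
function appendItems(doc, parent, labels) {
  const fragment = doc.createDocumentFragment();
  for (const label of labels) {
    const item = doc.createElement('li');
    item.textContent = label;
    fragment.appendChild(item); // the fragment is not part of the page yet
  }
  parent.appendChild(fragment); // one insertion into the live DOM
  return parent;
}

// Browser usage, assuming a <ul id="list"> exists on the page.
if (typeof document !== 'undefined') {
  const list = document.getElementById('list');
  if (list) appendItems(document, list, ['one', 'two', 'three']);
}
```

The gain is largest when the loop would otherwise also read layout properties between insertions, so it is worth measuring before and after.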

Here’s an incomplete list of things causing reflows:

- resizing the window

- changing the font

- content changes

- adding or removing a stylesheet

- adding or removing classes

- adding or removing elements

- changing orientation

- calculating size or position

- changing size or position

Ways to avoid reflows

- change classes at the lowest levels of the DOM tree to limit the impact

- avoid repeatedly modifying inline styles

- trade smoothness for speed if you’re doing a JavaScript animation

- avoid table layouts

- batch DOM operations

- debounce window resize events
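To make the last item concrete, here is a minimal debounce sketch. The names `debounce` and `onResize` and the 200 ms delay are assumptions for illustration, not something taken from the course.

```javascript
// Minimal debounce sketch: `fn` runs only after `delayMs` have passed
// without another call, so a burst of events results in a single run.
function debounce(fn, delayMs) {
  let timerId = null;
  return function debounced(...args) {
    if (timerId !== null) clearTimeout(timerId); // cancel the pending run
    timerId = setTimeout(() => {
      timerId = null;
      fn.apply(this, args); // run with the arguments of the latest call
    }, delayMs);
  };
}

// Browser usage: react to a resize only once the user has stopped resizing.
if (typeof window !== 'undefined') {
  const onResize = debounce(() => {
    console.log('resized to', window.innerWidth, window.innerHeight);
  }, 200);
  window.addEventListener('resize', onResize);
}
```

Whatever layout work the handler does now happens once per resize gesture instead of once per resize event.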

Layout thrashing

You can see the comparison between alternating reads and writes in a loop and batching all reads before all writes in this CodePen, which I forked and modified from Steve Kinney’s. I’ve added some performance markers so that you can just read the logged measure and see exactly how long each approach took.

    const button = document.getElementById('double-sizes');
    const boxes = Array.from(document.querySelectorAll('.box'));

    // The three tries below are alternatives: attach only one listener at a time.

    // First try, read/write -> bad
    const doubleWidth = el => {
      const width = el.offsetWidth;        // read: forces layout if a write is pending
      el.style.width = `${width * 2}px`;   // write: invalidates layout again
    };

    button.addEventListener('click', (event) => {
      performance.mark('start');
      boxes.forEach(doubleWidth);
      performance.mark('end');
      performance.measure('Ella', 'start', 'end');
      // getEntriesByName returns every 'Ella' measure so far; take the latest one
      const measure = performance.getEntriesByName('Ella', 'measure').pop();
      console.log(measure);
    });

    // Second try, batch reads, batch writes -> 35 times better ~ 0.6ms
    button.addEventListener('click', (event) => {
      performance.mark('start');
      const widths = boxes.map(box => box.offsetWidth);   // all reads first
      boxes.forEach((box, i) => {                         // then all writes
        box.style.width = `${widths[i] * 2}px`;
      });
      performance.mark('end');
      performance.measure('Ella', 'start', 'end');
      const measure = performance.getEntriesByName('Ella', 'measure').pop();
      console.log(measure);
    });

    // Third try, using fastdom, about twice as fast as batching ~ 0.27ms
    // Note: fastdom defers the queued work to a later animation frame, so the
    // marks below mostly capture the time to schedule it, not the DOM work itself.
    const doubleWidthWithFastdom = el => {
      fastdom.measure(() => {
        const width = el.offsetWidth;
        fastdom.mutate(() => {
          el.style.width = `${width * 2}px`;
        });
      });
    };

    button.addEventListener('click', (event) => {
      performance.mark('start');
      boxes.forEach(doubleWidthWithFastdom);
      performance.mark('end');
      performance.measure('Ella', 'start', 'end');
      const measure = performance.getEntriesByName('Ella', 'measure').pop();
      console.log(measure);
    });

In the above example, batching the reads and writes was 35 times faster than alternating them.

The browser tries to queue reflows by default. When you write something that affects layout, such as the width of an element, the browser only marks the layout as dirty and defers the recalculation until it is needed. But if you then read a layout property like offsetWidth, the browser is forced to stop and process the pending reflow right away so that it can return an accurate value, even if it doesn’t immediately paint the change. This is called a forced synchronous layout: the browser cannot queue the work for later, it has to do it now, synchronously. Repeating this in a loop, where each read follows the write from the previous iteration, is what’s known as layout thrashing.

Conversely, if you first read the widths of all of the elements and only afterwards update them, the reads can all be answered from the same, already calculated layout, and the writes can be queued and processed in a single reflow.
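The idea of separating reads from writes can be sketched as a tiny scheduler with two queues. This is only an illustration of the principle and not fastdom’s actual implementation. The names `createScheduler`, `measure`, `mutate` and `flush` are chosen for this example, and the `schedule` parameter is an assumption that lets you decide when the queues are flushed.

```javascript
// Sketch of a read/write scheduler: reads and writes go into separate queues
// and a flush runs all the reads before all the writes.
function createScheduler(schedule) {
  const reads = [];
  const writes = [];
  let scheduled = false;

  function flush() {
    try {
      // Keep going until both queues are empty, so jobs queued during the
      // flush (such as a write queued from inside a read) are also handled.
      while (reads.length > 0 || writes.length > 0) {
        while (reads.length > 0) reads.shift()();
        while (writes.length > 0) writes.shift()();
      }
    } finally {
      scheduled = false;
    }
  }

  function request() {
    if (!scheduled) {
      scheduled = true;
      schedule(flush);
    }
  }

  return {
    measure(fn) { reads.push(fn); request(); },
    mutate(fn) { writes.push(fn); request(); },
  };
}

// Browser usage: flush once per animation frame.
if (typeof requestAnimationFrame !== 'undefined' && typeof document !== 'undefined') {
  const scheduler = createScheduler((cb) => requestAnimationFrame(cb));
  document.querySelectorAll('.box').forEach((box) => {
    scheduler.measure(() => {
      const width = box.offsetWidth;
      scheduler.mutate(() => { box.style.width = `${width * 2}px`; });
    });
  });
}
```

Even though each box queues its own read followed by its own write, the flush still performs every read before the first write, which is the same order as the hand-batched version.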

Frameworks

Frameworks like React will help you out by taking care of some of these things. However, you do take a hit from the overhead of the framework code itself. Depending on the specific case, the trade-off is usually worth it since it enables higher developer productivity.

Keep in mind though that stuff like layout thrashing can still happen in a React app so you have to always be checking and measuring to ensure performance problems don’t sneak up on you.

Painting

Triggering a layout will always trigger a paint because things have changed and they now look different.

But there will be paints that were not triggered by a layout. For example, changing the background color of an element will only trigger a paint.

The compositor thread

The browser runs multiple threads. There’s a thread for the browser UI. Then we get a separate thread for each of the open tabs. Each of these is the main thread for its tab and the one we care about most. It is where our JS runs, where layout happens, and where most of the other things we usually work on optimizing take place.

Then there’s another thread, the compositor thread, that uses the machine’s GPU to composite what are called layers. The painting process generates bitmap images that need to be composed together in order to show the web page to the user, and those bitmaps are the layers that go to the compositor thread.

Layers

By default we have one single layer, the one that holds the entire page. Whenever we position something with position: fixed for example, that element is taken out of the layer of the page and put into its own layer, similar to what you’d have in Photoshop.

Sometimes that can be helpful because it saves the browser from having to do a lot of repainting or what is called paint storming. For example if you had a sidebar menu animating while opening, by default the browser might have to repaint the whole page as the element moves. By moving the menu to its own layer the browser will only paint the actual changing menu and moreover this will be handled by the GPU which is much better at handling this task.

You cannot force a browser to move an element to its own layer, but you can give it hints. In the past this was hacked by setting transform: translateZ(0) on an element. Nowadays there’s a formal hint, will-change: transform. Instead of transform you can name whichever property you expect to change, for example opacity.

Do keep in mind that this is a hint and the browser can disregard it.

Of course there are downsides to overdoing this. The performance boost you get with extra layers comes at the cost of increased memory usage. So ideally you’d move elements to their own layers sparingly and return them when the extra layer is no longer needed.

Let’s take the example of a sidebar menu that slides in from the side. We’d have a button that, when clicked, animates the menu open. To make the animation smoother and offload the work to the GPU, ideally we’d put the menu into its own layer, and when the animation is done we’d return it to the main layer of the page.

To achieve this we’d set up an event listener for mouseenter on the button that adds will-change: transform to the menu. By the time the user clicks the button, the menu will already have moved to its own layer, so there’s no stuttering in the animation. We’d also set a mouseleave listener that undoes the new layer by setting will-change back to auto, and do the same thing on an animationend or transitionend event, depending on the use case.
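The listeners described above could be wired up roughly like this. It is a sketch under assumptions: the helper name `promoteOnIntent` and the `.menu-toggle` and `.menu` selectors are hypothetical, and it assumes the menu is animated with a CSS transition (use animationend instead for a CSS animation).

```javascript
// Hypothetical helper: hint a layer for `menu` while the pointer is over
// `button`, and drop the hint when the pointer leaves or the transition ends.
function promoteOnIntent(button, menu) {
  const promote = () => { menu.style.willChange = 'transform'; };
  const demote = () => { menu.style.willChange = 'auto'; };

  button.addEventListener('mouseenter', promote);
  button.addEventListener('mouseleave', demote);
  menu.addEventListener('transitionend', demote);
}

// Browser usage, assuming these two elements exist on the page.
if (typeof document !== 'undefined') {
  const toggle = document.querySelector('.menu-toggle');
  const sidebar = document.querySelector('.menu');
  if (toggle && sidebar) promoteOnIntent(toggle, sidebar);
}
```

Hovering the button gives the browser a little lead time before the click, which is the whole point of the hint.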

Tools

Chrome DevTools gives you access to a Layers tab and a Rendering tab that can be used to visualize painting and layers in real time, and both are very helpful in debugging potential bottlenecks.